I was going through Ten Rules for Next Generation Data Integration (by Philip Russom, TDWI) and found it really interesting. Here is the gist of the deck:

Data integration (DI) has undergone an impressive evolution in recent years. Today, DI is a rich set of powerful techniques, including ETL (extract, transform, and load), data federation, replication, synchronization, changed data capture, data quality, master data management, natural language processing, business-to-business data exchange, and more. Furthermore, vendor products for DI have achieved maturity, users have grown their DI teams to epic proportions, competency centers regularly staff DI work, new best practices continue to arise (such as collaborative DI and agile DI), and DI as a discipline has earned its autonomy from related practices such as data warehousing and database administration.

Ten Rules for Next Generation Data Integration

1. DI is a family of techniques.

2. DI techniques may be hand coded, based on a vendor’s tool, or both.

3. DI practices reach across both analytics and operations.

4. DI is an autonomous discipline.

5. DI is absorbing other data management disciplines.

6. DI has become broadly collaborative.

7. DI needs diverse development methodologies.

8. DI requires a wide range of interfaces.

9. DI must scale.

10. DI requires architecture.

Why Care About Next Generation Data Integration (NGDI) Now?

Businesses face change more often than ever before.

Even mature DI solutions have room to grow.

The next generation is an opportunity to fix the failings of prior generations.

For many, the next generation is about tapping more functions of DI tools they already have.

Unstructured data is still an unexplored frontier for most DI solutions.

DI is on its way to becoming IT infrastructure.

DI is a growing and evolving practice.

Monday, February 27, 2012

Friday, February 24, 2012

The New Analytical Ecosystem: Making Way for Big Data (By Wayne Eckerson)

I was going through Wayne Eckerson's blog and found this article really interesting.

The top-down world. In the top-down world, source data is processed, refined, and stamped with a predefined data structure--typically a dimensional model--and then consumed by casual users using SQL-based reporting and analysis tools. In this domain, IT developers create data and semantic models so business users can get answers to known questions and executives can track performance of predefined metrics. Here, design precedes access. The top-down world also takes great pains to align data along conformed dimensions and deliver clean, accurate data. The goal is to deliver a consistent view of the business entities so users can spend their time making decisions instead of arguing about the origins and validity of data artifacts.
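To make the top-down idea concrete, here is a minimal sketch of a dimensional model queried with SQL. It is not from Eckerson's article: the table names (`dim_product`, `fact_sales`), the sample rows, and the helper `sales_by_category` are all hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import sqlite3

# Hypothetical star schema: one fact table keyed to one dimension table.
# All names and sample rows are illustrative assumptions.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_product (
        product_key INTEGER PRIMARY KEY,
        category    TEXT
    );
    CREATE TABLE fact_sales (
        product_key INTEGER REFERENCES dim_product(product_key),
        amount      REAL
    );
    INSERT INTO dim_product VALUES (1, 'Hardware'), (2, 'Software');
    INSERT INTO fact_sales VALUES (1, 100.0), (1, 50.0), (2, 75.0);
""")


def sales_by_category(connection):
    """Answer a 'known question': total sales per product category."""
    rows = connection.execute("""
        SELECT d.category, SUM(f.amount)
        FROM fact_sales AS f
        JOIN dim_product AS d ON d.product_key = f.product_key
        GROUP BY d.category
        ORDER BY d.category
    """).fetchall()
    return dict(rows)


print(sales_by_category(conn))
```

The structure is defined before anyone queries it ("design precedes access"), so a reporting tool only has to join facts to dimensions to answer a predefined question.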

The underworld. Creating a uniform view of the business from heterogeneous sets of data is not easy. It takes time, money, and patience, often more than most departmental heads and business analysts are willing to tolerate. They often abandon the top-down world for the underworld of spreadmarts and data shadow systems. Using whatever tools are readily available and cheap, these data-hungry users create their own views of the business. Eventually, they spend more time collecting and integrating data than analyzing it, undermining their productivity and a consistent view of business information.

The bottom-up world. The new analytical ecosystem brings these prodigal data users back into the fold. It carves out space within the enterprise environment for true ad hoc exploration and promotes the rapid development of analytical applications using in-memory departmental tools. In a bottom-up environment, users can't anticipate the questions they will ask on a daily or weekly basis or the data they'll need to answer those questions. Often, the data they need doesn't yet exist in the data warehouse.

The new analytical ecosystem creates analytical sandboxes that let power users explore corporate and local data on their own terms. These sandboxes include Hadoop, virtual partitions inside a data warehouse, and specialized analytical databases that offload data or analytical processing from the data warehouse or handle new untapped sources of data, such as Web logs or machine data. The new environment also gives department heads the ability to create and consume dashboards built with in-memory visualization tools that point both to a corporate data warehouse and other independent sources.

Combining top-down and bottom-up worlds is not easy. BI professionals need to assiduously guard data semantics while opening access to data. For their part, business users need to commit to adhering to corporate data standards in exchange for getting the keys to the kingdom. To succeed, organizations need robust data governance programs and lots of communication among all parties.

Summary. The Big Data revolution brings major enhancements to the BI landscape. First and foremost, it introduces new technologies, such as Hadoop, that make it possible for organizations to cost-effectively consume and analyze large volumes of semi-structured data. Second, it complements traditional top-down data delivery methods with more flexible, bottom-up approaches that promote ad hoc exploration and rapid application development.

Friday, February 17, 2012

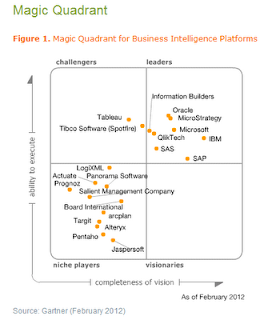

Gartner's Magic Quadrant for Business Intelligence Platforms 2012

IBM-Cognos, Microsoft-SSAS, Oracle-OBIEE, SAP-BusinessObjects, MicroStrategy, Information Builders, QlikTech, and SAS are in the Leaders quadrant of the 2012 Magic Quadrant for BI Platforms. Tableau and Tibco-Spotfire are in the Challengers quadrant, and there are a few new entrants (Alteryx, Prognoz).

For more please refer: http://www.gartner.com/technology/reprints.do?id=1-196WFFX&ct=120207&st=sb

Sunday, February 5, 2012

The Forrester Wave™: Enterprise Hadoop Solutions, Q1 2012

Wednesday, February 1, 2012

From Business Intelligence to Intelligent Business (By Gartner)

Intelligence cannot be bought. An intelligent business develops the right culture and processes to ensure that the right information is available for people to make the right decisions at all levels of the organization.

Key Findings

# Information required for effective decisions comes from many diverse sources.

# Too much information can be as bad as too little information.

# Intelligent business develops by linking process and information integration with business strategies.

Recommendations

# Focus business intelligence (BI) efforts on delivering the right information to the right people at the right time to impact critical business decisions in key business processes.

# Change the mind-set from one that simply demands more information to one in which asking the right questions drives impactful decisions.

# Create project teams based not on data ownership but on information needs up and down the management chains and across functions to drive maximum decision impact.

Analysis

Check the websites of ERP, BI and content management vendors, and a common thread is "buy our product and receive best-practice solutions." If it were as simple as this, most of the major enterprises around the globe would be at world's best practice, because they have generally invested in data warehouses and BI tools that have become progressively more mature.

But ask a range of business leaders "Does everyone in your organization have the right information in the right format at the right time to make the best possible decisions?" and the common answer is "No." One reason is that the information required to make many decisions does not reside in any convenient databases but comes from a complex mix of sources, including e-mails, voice messages, personal experience, multimedia information, and external communications from suppliers, customers, governments and so on.

Traditionally, BI has been used for performance reporting from historical data and as a planning and forecasting tool for a relatively small subset of those in the organization who rely on historical data to create a crystal ball for looking into the future.

Providing real-time information derived from a fusion of data and analytical tools with key business applications such as call center, CRM or ERP creates the ability to push alerts to knowledge or process workers and represents a significant effectiveness impact on decision making, driving additional revenue, margin or client satisfaction. Modeling future scenarios permits examination of new business models, new market opportunities and new products, and creates a culture of "Which opportunities will we seize?" Intelligent businesses not only see the future, but often create it.

Case studies presented in Executive Program reports highlight initiatives that are exploiting business intelligence to create intelligent businesses. Three key recommendations are:

1. Focus BI efforts on delivering the right information to the right people.

2. Change the mind-set from more information to answering the right questions.

3. Create project teams based on information needs.
